You're trying to draft a tricky email to your boss, and you're stuck. So, you turn to your new AI assistant, pasting in the context of the conversation and asking it to craft the perfect, professional response. A few seconds later, you have a brilliant draft. It's a small moment of digital magic, one that's happening in homes and offices across the country. We ask AI for help with everything from writing a cover letter and planning a vacation to getting advice on personal relationships.

These tools are incredibly helpful, but have you ever stopped to wonder what happens to the information you share? These powerful AI models are constantly learning, but what are they learning from you? The issue became startlingly clear recently when it was discovered that some shared conversations from ChatGPT were accidentally made public and were appearing in search engine results [1]. This wasn't just a minor glitch; it created what we call an "accidental archive"—a permanent, public record of private chats that users never intended to share. This news served as a crucial reminder: our private chats might not be as private as we think.

This article is your guide to understanding AI privacy. We'll break down and compare the policies of three of the biggest players in the AI world (OpenAI's ChatGPT, Google's Gemini, and Anthropic's Claude) in plain, simple English. No jargon, no confusing legal language. Just the information you need to make an informed choice about your data.

At Quantum Fiber, we believe our job is about more than just providing a fast, reliable internet connection. We're committed to empowering our customers with the knowledge to help navigate their digital lives safely and confidently. Think of us as your trusted guide to the online world.

Feel free to jump to areas that interest you the most:

- How a private chat becomes a public headline: The “share” feature flaw

- It’s more than just a chat: what you’re sharing with AI

- The privacy showdown: ChatGPT vs. Gemini vs. Claude

- How the top AI platforms handle your data

- The default setting: who uses your chats to get smarter?

- Where do your conversations go?

- Taking back control of your data

- Making the best choice for your digital privacy

- Your digital life, your rules

How a private chat becomes a public headline: The "share" feature flaw

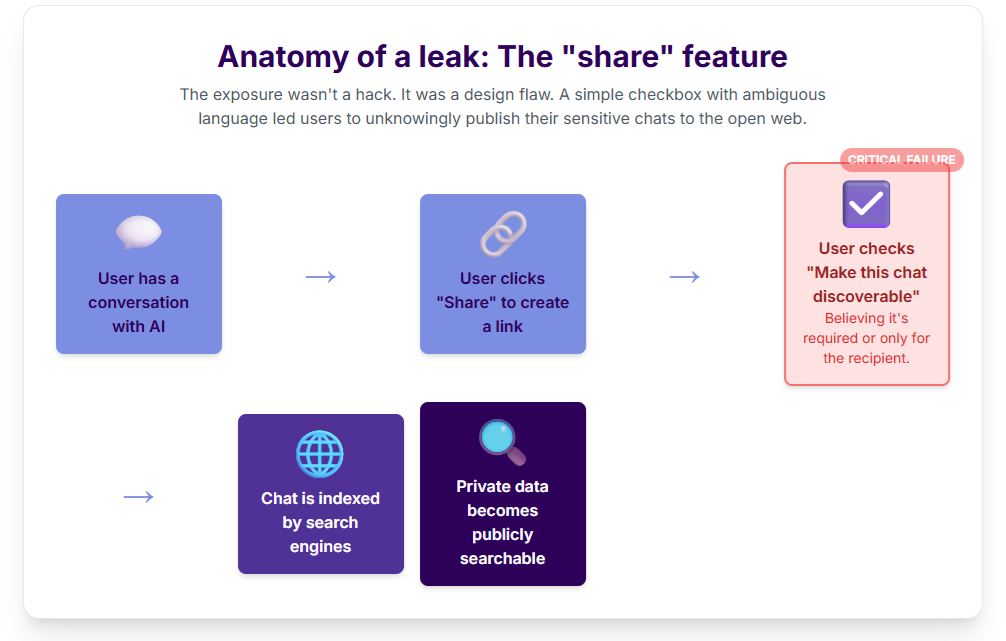

So how did this happen? The exposure wasn't the result of a sophisticated hack. It was a two-part failure, starting with a design flaw and ending with a misunderstanding of how the internet works.

It all began with a feature intended for convenience. When users wanted to share a conversation, they could create a link. However, the pop-up window for this feature included a small, easy-to-miss checkbox that said, "Make this chat discoverable." Many users either didn't notice it, or the ambiguous wording led them to believe it was a necessary step to share the link with a specific person. In reality, checking that box gave search engines permission to index the conversation.

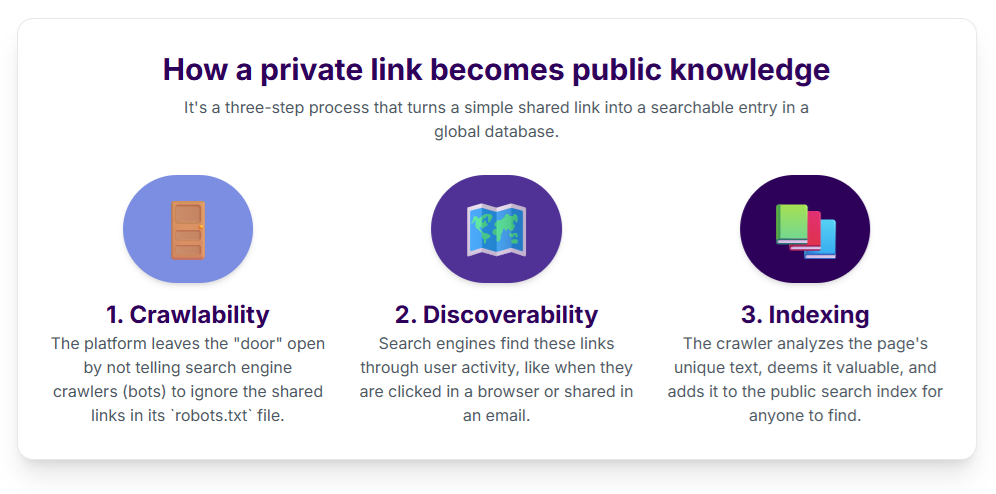

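This user-facing design flaw then triggered a technical chain reaction: once the box was checked, the shared link was no longer hidden from search engines, their crawlers were free to index the page, and the conversation could then surface in ordinary search results.

This simple process transformed a convenience feature into a major privacy failure, turning private chats into public webpages.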
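
For readers curious about the mechanics, here is a minimal Python sketch of how a web page can tell search engines whether it may be indexed. It relies on the standard `robots` meta tag, which major search engines honor. How ChatGPT's share pages were actually built is not described in this article, so the two sample pages below are invented purely for illustration.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            for directive in attr_map.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_indexable(html: str) -> bool:
    """Return False if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" not in parser.directives and "none" not in parser.directives


# Hypothetical shared-chat pages, invented for this example.
private_page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
discoverable_page = "<html><head><title>Shared chat</title></head></html>"

print(is_indexable(private_page))       # False
print(is_indexable(discoverable_page))  # True
```

The point of the sketch is that a page with no "do not index" signal is treated as fair game by crawlers, which is why a single checkbox can decide whether a chat stays out of search results.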

It's more than just a chat: what you're sharing with AI

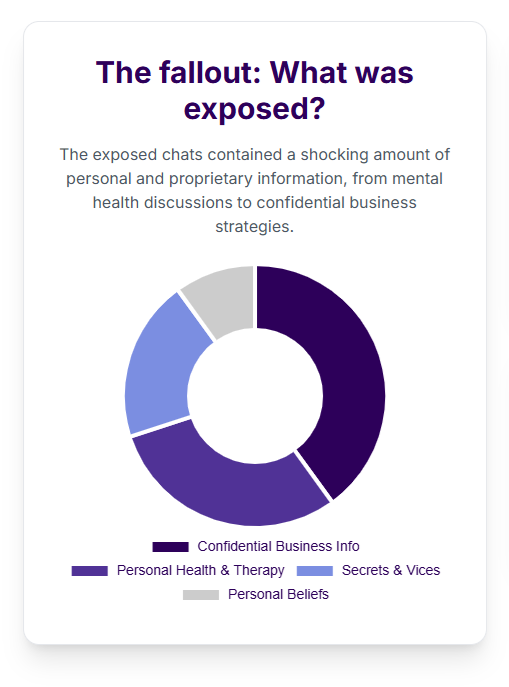

Every time you interact with an AI, you're creating a data point. The infographic highlights the shocking reality of what was exposed in these leaks. It wasn't just harmless chatter; it was deeply personal and confidential information. Consider what you might discuss with an AI:

- Personally identifiable information: You might accidentally (or intentionally) include your name, email address, phone number, or even your home address in a conversation.

- Sensitive personal details: Many people use AI as a sounding board for personal issues. The exposed chats revealed that a large portion of this data consisted of discussions about personal health, therapy sessions, and private beliefs.

- Proprietary or confidential information: If you use AI for work, you could be pasting in company data. The single largest category of exposed data was confidential business information, including project ideas, client information, and internal strategies.
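
One practical habit that follows from the list above is to scrub obvious identifiers from text before pasting it into any AI tool. The Python sketch below shows the idea for email addresses and US-style phone numbers. The patterns and the `redact` helper are illustrative assumptions, not a complete PII filter: they will miss names, street addresses, and many other formats.

```python
import re

# Simple illustrative patterns; real-world PII detection needs far more than this.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_PATTERN = re.compile(
    r"(?<!\d)(?:\+?1[\s.-]?)?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}(?!\d)"
)


def redact(text: str) -> str:
    """Replace email addresses and US-style phone numbers with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    text = PHONE_PATTERN.sub("[PHONE]", text)
    return text


draft = "Reach me at jane.doe@example.com or 555-123-4567 about the Q3 plan."
print(redact(draft))
# Reach me at [EMAIL] or [PHONE] about the Q3 plan.
```

Even a rough filter like this lowers the odds that contact details end up stored in a chat history, but it is no substitute for reading what you paste.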

So, where does all this information go? Most of the time, it's used for "AI training."

Think of an AI model as a student who is constantly studying to get smarter. To learn how to write, talk, and reason like a human, it needs to read and analyze a massive amount of text. This "textbook" is often made up of data from the internet, books, and—you guessed it—user conversations. By default, many platforms use your chats to help refine their AI's performance, fix its mistakes, and make it more helpful in the future. While companies say this data is anonymized, the recent news has shown that leaks can happen, and "anonymized" data can sometimes be traced back to individuals.

This incident highlighted that not all AI platforms treat your privacy the same way, which brings us to the crucial differences in their policies.

The privacy showdown: ChatGPT vs. Gemini vs. Claude

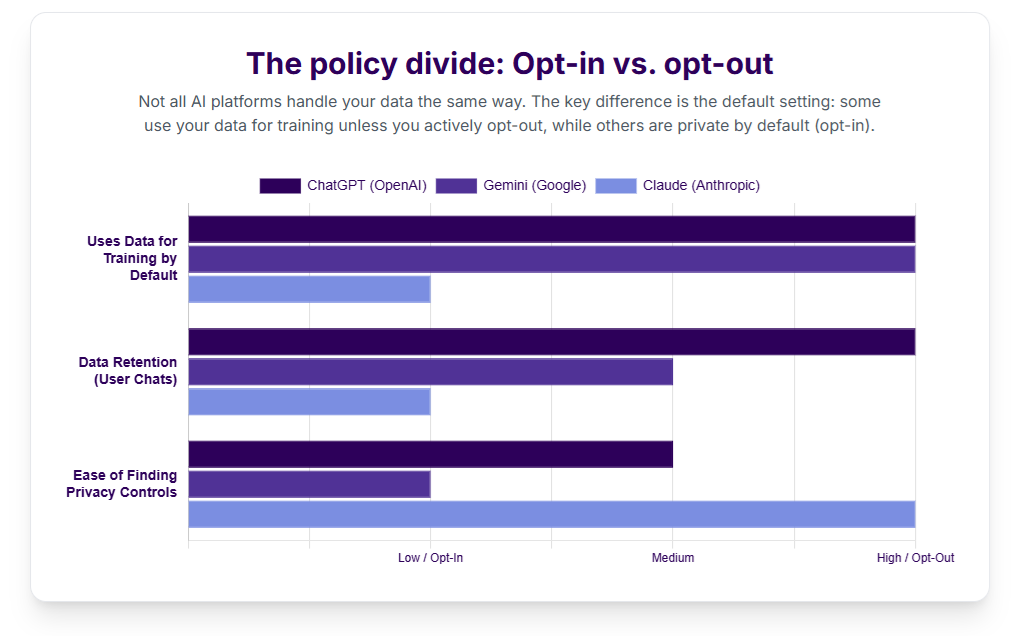

Now, let's get down to the specifics. The differences in the platforms' philosophies and default settings are significant, as visualized in the infographic's comparison chart. We're going to compare them on three key points: how they use your data for training, how long they hold onto it, and how easy it is for you to find and change your privacy settings.

How the top AI platforms handle your data

To make it simple, here is a quick comparison table that breaks down the most important differences.

| Feature | ChatGPT (OpenAI) | Gemini (Google) | Claude (Anthropic) |
| --- | --- | --- | --- |
| Uses your data for training by default? | Yes | Yes | No |
| How to stop training on your data? | Must opt out in settings | Must opt out in settings | No action needed; training is opt-in |
| Data retention period | Indefinite, unless deleted | Up to 3 years, unless deleted | Up to 90 days for flagged content; otherwise shorter and not used for training |
| Ease of finding privacy controls | Moderate | Moderate | Easy |

Let's dive a little deeper into what these differences mean for you.

The default setting: who uses your chats to get smarter?

This is arguably the most important distinction between the three services. The core of the issue comes down to two different approaches: "opt-out" versus "opt-in."

- Opt-out (the default for ChatGPT and Gemini): Think of this as being automatically enrolled in a program. When you use ChatGPT or Gemini, you are, by default, giving them permission to use your conversations to train their models. You are "in" unless you take action to get "out." To stop them from using your data, you have to navigate to your settings and find the specific toggle to turn this feature off. Many users are not even aware this setting exists.

- Opt-in (the default for Claude): This approach is the complete opposite and is what makes Claude stand out from a privacy perspective. It treats your data as private by default. Claude will not use your conversations to train its models unless you find a setting and explicitly give it permission to do so. You are "out" by default and must take action to get "in." This "privacy-first" design means you can use the service with a much higher degree of confidence that your conversations will remain private.

For the everyday user, this single difference is crucial. If you value your privacy and don't want to worry about your conversations being used for training, Claude's opt-in model is a significant advantage.
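
The opt-out versus opt-in distinction can be expressed in a few lines of Python. The `TrainingConsent` class below is a hypothetical model written for this article, not any vendor's actual API. It shows that the only difference between the two approaches is what happens when a user never touches the setting.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrainingConsent:
    """Hypothetical model of a training-consent setting (not a real vendor API)."""

    default_allows_training: bool
    user_choice: Optional[bool] = None  # None means the user never changed the setting

    def used_for_training(self) -> bool:
        # With no explicit choice, the platform's default decides.
        if self.user_choice is None:
            return self.default_allows_training
        return self.user_choice


opt_out_platform = TrainingConsent(default_allows_training=True)
opt_in_platform = TrainingConsent(default_allows_training=False)

print(opt_out_platform.used_for_training())  # True
print(opt_in_platform.used_for_training())   # False

# A user on an opt-out platform who finds the toggle and switches it off.
informed_user = TrainingConsent(default_allows_training=True, user_choice=False)
print(informed_user.used_for_training())     # False
```

Because many people never open their settings, the default value ends up deciding the outcome for most users.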

Where do your conversations go?

The next important question is how long these companies store your chat history.

- ChatGPT and Gemini: Both of these services are designed to remember your past conversations. This is a useful feature, as it allows you to go back and reference previous chats. However, it also means your data stays on their servers for a long time unless you act. ChatGPT keeps conversations indefinitely until you manually delete individual chats or your entire history. Google states it keeps Gemini activity for up to 36 months, though you can change this setting.

- Claude: Anthropic has a different policy. They state that they retain conversations only for a short period to provide the service, and that chats flagged for trust and safety review are held for up to 90 days. Since they don't use your data for training by default, their need to store it long-term is greatly reduced. This commitment to data minimization is another key aspect of their privacy-focused approach.
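
To make these retention periods concrete, the short Python sketch below works out the latest date a conversation could remain on file under each period mentioned above. The `latest_deletion_date` helper is a made-up illustration, and treating 36 months as 3 × 365 days is an approximation that ignores leap days.

```python
from datetime import date, timedelta
from typing import Optional

# Retention periods as described in this article, expressed in days.
# None stands for "kept indefinitely unless you delete it".
INDEFINITE = None
THIRTY_SIX_MONTHS = 3 * 365  # approximation: ignores leap days
NINETY_DAYS = 90


def latest_deletion_date(created: date, retention_days: Optional[int]) -> Optional[date]:
    """Return the last day a chat could be retained, or None if there is no limit."""
    if retention_days is None:
        return None
    return created + timedelta(days=retention_days)


created = date(2025, 1, 1)
print(latest_deletion_date(created, INDEFINITE))         # None
print(latest_deletion_date(created, THIRTY_SIX_MONTHS))  # 2028-01-01
print(latest_deletion_date(created, NINETY_DAYS))        # 2025-04-01
```

Seen this way, a chat created on New Year's Day could be gone by April under a 90-day limit, or still on file years later under the longer policies.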

Taking back control of your data

Knowing you can change your settings is one thing. Being able to easily find them is another. How do these platforms stack up when it comes to user control?

- ChatGPT: OpenAI has made improvements here. You can find the data controls by clicking on your name in the bottom-left corner and going to Settings > Data Controls. From there, you can turn off "Chat history & training." It's fairly straightforward once you know where to look.

- Gemini: As a Google product, your Gemini privacy settings are tied to your main Google Account. You need to go to your "Gemini Apps Activity" page. From there, you can see your history and turn off data collection. Because it's part of the larger Google ecosystem, it can sometimes feel a bit buried for users who are just focused on the Gemini interface itself.

- Claude: Given their privacy-first stance, Anthropic is very transparent. Their privacy policy is written in clear, easy-to-understand language, and because you don't need to opt out of training, there are fewer settings to worry about. The focus is on simplicity and clarity.

Making the best choice for your digital privacy

After looking at the details, it's clear that your choice of AI assistant can have a real impact on your digital privacy. There is no single "best" AI for everyone; the right choice depends on your personal comfort level and how you plan to use the tool.

Here's a simple way to think about it:

- If your priority is convenience and features ... ChatGPT and Gemini are incredibly powerful and are integrated into a wide range of other applications. They are excellent tools, but they do place the responsibility on you to manage your privacy. If you choose to use them, make it a habit to go into your settings and opt out of data training if you are discussing anything remotely sensitive.

- If your priority is privacy ... Claude is the clear choice. Its opt-in model for data training means you can be confident that your conversations are not being used to train the AI unless you explicitly agree. It's designed for users who want powerful AI assistance without having to worry about their data being used in the background.

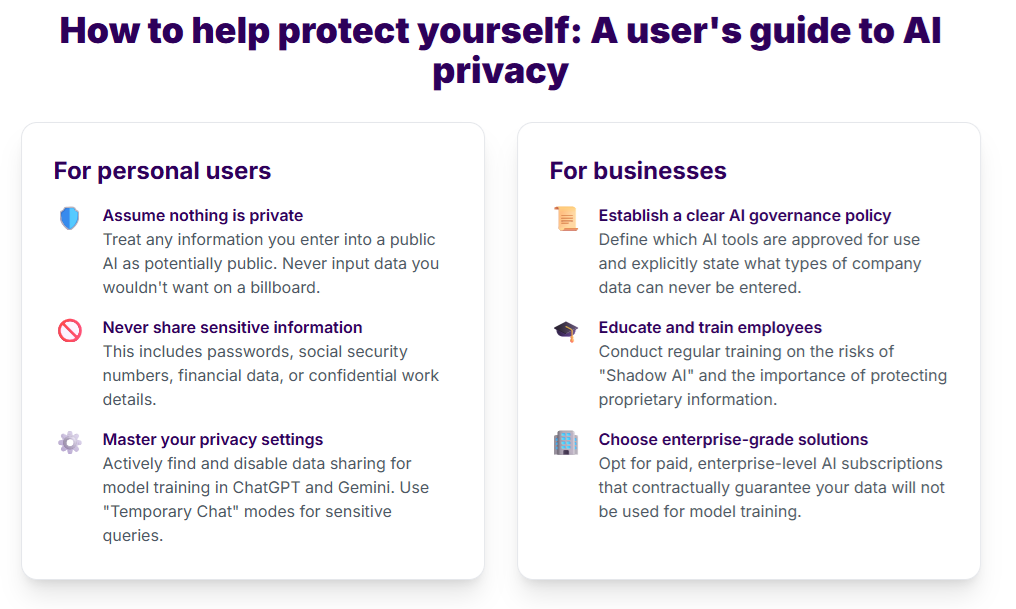

Ultimately, the power is in your hands. The goal of this article isn't to scare you away from using AI, but to empower you. By understanding how these platforms work, you can choose the one that best aligns with your values and take the necessary steps to protect your information. Being informed is the most important step you can take toward digital safety.

Your digital life, your rules

The world of artificial intelligence is evolving at a dizzying pace, and it's bringing incredible new tools into our lives. But as we embrace this new technology, we must remain vigilant about our privacy. The key takeaway is simple: not all AI platforms are created equal. Understanding the fundamental difference between an opt-in and an opt-out privacy model is the first and most critical step in protecting your personal information.

At Quantum Fiber, we're passionate about providing you with a fast and reliable connection that opens up the entire digital world. But our commitment to you doesn't stop there. We believe in empowering our customers to use that connection safely, smartly, and with confidence. Your online experience is important to us, and we'll continue to provide you with the information you need to help stay in control of your digital life.

We encourage you to take a moment today to check the privacy settings on any AI tools you use. And if you found this article helpful, please share it with friends and family. In our connected world, a little bit of knowledge can go a long way.

References

[1] Search Engine Land. (2024, July 31). Your ChatGPT conversations may be visible in Google Search. Search Engine Land. Retrieved August 19, 2024

Content Disclaimer - All content is for informational purposes only, may require user’s additional research, and is provided “as is” without any warranty, condition of any kind (express or implied), or guarantee of outcome or results. Use of this content is at user’s own risk. All third-party company and product or service names referenced in this article are for identification purposes only and do not imply endorsement or affiliation with Quantum Fiber. If Quantum Fiber products and offerings are referenced in the content, they are accurate as of the date of issue. Quantum Fiber services are not available everywhere. Quantum Fiber service usually means 100% fiber-optic network to your location but, in limited circumstances, Quantum Fiber may need to deploy alternative technologies coupled with a non-fiber connection from a certain point (usually the curb) to your location in order to provide the advertised download speeds. ©2026 Q Fiber, LLC. All Rights Reserved. Quantum, Quantum Fiber and Quantum Fiber Internet are trademarks of Quantum Wireless LLC and used under license to Q Fiber, LLC.